Building Trackstarter: an AI-powered songwriting assistant

September 27, 2024 / Steve MacKinnon

I recently published Trackstarter, an AI-powered songwriting app that generates unique chord progressions and melodies to inspire songwriters. These musical ideas are played using virtual synthesizers running in the browser, and can be downloaded as MIDI files to turn into full songs in a Digital Audio Workstation (DAW). This post is the story behind building Trackstarter, and how I was able to leverage ChatGPT and the open source JavaScript ecosystem to rapidly accelerate prototyping and building this app.

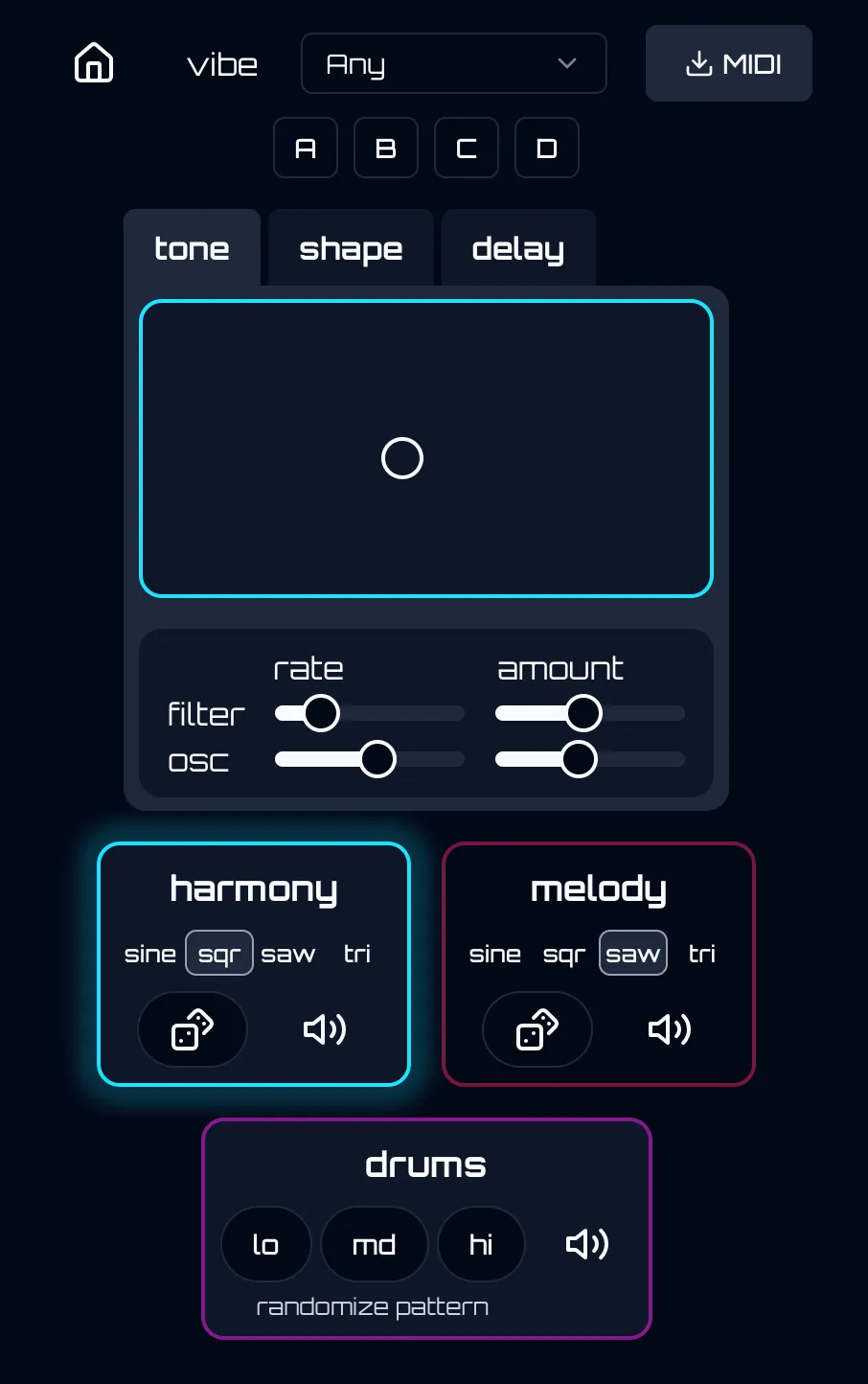

The Trackstarter interface

The Trackstarter interface

Trackstarter is written in TypeScript using Next.js/React, but the code examples provided are framework agnostic. I’ll focus on the interesting stuff - the logic driving chord progression and melody generation. If you’re curious to learn more how these are integrated into a React app, how the UI works, or anything else, you can dive into the application source code on GitHub here.

Inspiration

Trackstarter was inspired by my own bout of writer’s block. As an electronic music producer, I found myself bored with the same old scales and chords I usually reach for. Naturally, as one does in 2024, I asked ChatGPT to help me out.

Can you list some exotic musical scales that I could use to create a compelling dance music track?

GPT replied with an impressive list of ten different scales using the following format:

Hirajoshi Scale (Japanese)

- Formula: 1, 2, ♭3, 5, ♭6

- Notes in C: C, D, E♭, G, A♭

- This scale has a bright yet mysterious sound, often used in traditional Japanese music. Its pentatonic nature makes it ideal for dance music, as it’s less complex but still exotic.

However, simply having a list of scales didn’t solve the problem for me — playing them back on my MIDI keyboard felt uninspiring (I’m a terrible pianist).

💡 the big idea strikes: what if I have ChatGPT generate a list of scales and chord progressions categorized by mood, then build a tool that can quickly audition different scales in order to find some inspiration. Seems reasonable. I jotted down a list of moods, and sent them over to my pal ChatGPT:

Can you give me two TypeScript objects. One should map moods to arrays of relevant chord progressions. The other should map moods to scales. Here are the moods:

type Mood = | "Uplifting" | "Dark" | "Exotic" | "Mysterious" | "Dramatic" | "Sophisticated" | "Dreamy" | "Groovy" | "Surreal";

Response from ChatGPT:

export const MOOD_TO_PROGRESSIONS = {

Dark: [

"1-b6-b7-5",

"1-4-b6-5",

"1-b2-4-5",

"6-5-4-3",

"1-3-b6-b2",

"1-b3-b7-b6",

"1-b5-4-b2",

"1-b7-b6-5",

"1-b7-b6-4",

"1-b3-4-5",

],

// ...repeated for each mood

export const MOOD_TO_SCALES = {

Uplifting: [

"Major",

"Lydian",

"Mixolydian",

"Ionian",

"Pentatonic Major",

"Dorian",

"Major Blues",

"Lydian Augmented",

"Major Bebop",

],

// ...repeated for each mood

Here, the progressions indicate the position of the root note of each chord within the scale (1-indexed).

At this point, I had the foundational data needed to drive the Trackstarter experience. Next up: how can we turn this into music?

Making Sound 🔊

Before going deep on sequence generation, I wanted to setup a system for playing back audio to verify the generated sequences were sounding correct. Prior to working on Trackstarter, I had started writing a library that acts as a declarative interface in front of the WebAudio API. WebAudio is powerful, but delegating the details of how to handle sequencing, connecting nodes, etc. would help reduce complexity in the Trackstarter application code.

I’d written this mostly for fun to mimic the concept of React’s reconciliation process, but for an audio graph instead of a UI tree. Credit for this concept goes to Nick Thompson, who wrote Elementary Audio - a JavaScript DSP library that can runboth in the browser and in native apps (audio plug-ins, daws, etc). If you’re into audio dev, I’d highly recommend checking it out!

In my declarative WebAudio library, the AudioGraph class accepts an object that describes an audio graph, and it takes care of adding, removing, updating, and connecting WebAudio nodes under the hood. For example, here’s how Trackstarter sequences notes and plays them back with a sawtooth oscillator filtered by a lowpass filter. The structure may look familiar if you’ve worked with React components before.

const sequence = [

{ note: "C3", startStep: 0, endStep: 4 },

{ note: "D#3", startStep: 4, endStep: 8 },

// etc..

];

audioGraph.render(

// Each node accepts props describing the node's parameters, and an array of child nodes.

output(undefined, [

sequencer({

destinationNodes: ["harmony-osc"],

notes: sequence,

length: 64,

}),

filter({ type: "lowpass", frequency: 900, q: 10 }, [

// Here, we have a sawtooth oscillator, connected to a filter, then finally to the output.

osc({

key: "harmony-osc",

type: "sawtooth",

}),

]),

]),

);

In the Trackstarter application code, I have a React hook that UI components use to call audioGraph.render() with an updated state when the user generates a new sequence or adjusts parameters. If you’re curious, you can read the code for the useRenderAudioGraph hook here.

Building The Chord Progression Generator

The first problem I tackled was figuring out how to take a scale and chord progression and turn it into a sequence of notes that could be played in a browser. I was picturing the flow for randomly generating a chord progression would look roughly like:

- Take a

Moodas input. Randomly select a root note, scale, and chord progression using theMOOD_TO_SCALESandMOOD_TO_PROGRESSIONSmaps discussed earlier - Determine the note at each step in the scale (e.g. C Major => [C, D, E, F, G, A, B])

- Build each chord by “stacking thirds”. Start with the root note of the chord, then append the note two degrees up the scale to the chord; repeat

ntimes wherenis the length of the chord. - Determine the start and end times for each note. This is our sequence.

Step 1 was straightforward. I wrote a helper function for picking a random value from an array, then used that to pick a random root note, scale, and chord progression:

function getRandomValue<T>(array: readonly T[]): T {

const index = Math.min(

array.length - 1,

Math.floor(Math.random() * array.length),

);

return array[index];

}

// Example usage:

const mood = "Uplifting";

const rootNote = getRandomValue(NOTES);

const chordProgression = getRandomValue(MOOD_TO_PROGRESSIONS[mood]);

const scale = getRandomValue(MOOD_TO_SCALES[mood]);

For step 2, I needed to figure out which notes are in scale using rootNote as the root. My first thought was to:

- Create arrays of notes for each scale using C as the root note

- Write some code to transpose the scale up or down as needed (since we want to play back scales with arbitrary root notes)

That seemed feasible, but I wondered if there were any open source projects that could help out with this. After a quick Google search, I discovered that the kind contributors to Tonal.js have already solved this problem!

// Code snippet from the Tonal.js README

Scale.get("C major").notes; // yields ["C", "D", "E", "F", "G", "A", "B"];

Equipped with the lovely Tonal.js, we can now easily get an array of notes for a root note and scale:

const octave = 3;

const scale = Scale.get(`${rootNote}${octave} ${scale}`).notes;

// scale => ["F#3", "G#3", "A#3", "B3", "C#4", "D#4", "E#4"]

Onto step 3: building some chords. To do this, we need to build an array that includes every other note from the scale starting at the root of the chord. Easy enough, but there are a couple gotchas:

- Notes that wrap around the end of the scale should be shifted up an octave

- Alterations that flatten the root note should be handled correctly

Here’s what this looks like in Trackstarter:

function chordForScale(

scale: string[],

rootDegree: ScaleDegree,

chordLength: number,

): string[] {

return Array.from({ length: chordLength }).map((_, i) => {

const noteIndex = rootDegree.index + i * 2;

const note = scale[noteIndex % scale.length];

const SEMITONES_PER_OCTAVE = 12;

const octaveShift =

Math.floor(noteIndex / scale.length) * SEMITONES_PER_OCTAVE;

const alterationShift = rootDegree.flatten ? -1 : 0;

// The Note object from Tonal.js supports transposition:

return Note.transpose(

note,

Interval.fromSemitones(octaveShift + alterationShift),

);

});

}

Finally, for step 4, I mapped the notes from each chord onto a timeline. In Trackstarter, each chord progression includes four chords that each play for four beats. Each sequencer step represents a sixteenth note, and there are four sixteenth notes per beat:

function chordProgressionToSequencerEvents(

progression: string[][],

): SequencerEvent[] {

const events: SequencerEvent[] = [];

const STEPS_PER_CHORD = 16;

progression.forEach((chord, chordIndex) => {

chord.forEach((note) => {

events.push({

note,

startStep: chordIndex * STEPS_PER_CHORD,

endStep: chordIndex * STEPS_PER_CHORD + STEPS_PER_CHORD,

});

});

});

return events;

}

That’s a wrap for the chord progression generator! Next up: how to generate an accompanying melody.

Building The Melody Generator

Adding a melody to accompany the chord progression is where things get a bit more interesting. I’d been wanting to leverage Google Magenta for a music app project for years, and thought it might be the right tool for this job. Magenta includes an impressive number of machine learning models that specialize in different music generation tasks, and they have a JavaScript library, Magenta.js, that is capable of running inference for most models right in the browser using TensorFlow.js. You can learn more about Magenta here.

I browsed the list of Magenta music models, and stumbled across mel_chords, which was described as:

A 2-bar, 90-class onehot melody model with chord conditioning. Quantized to 2-byte weights.

Here, “90-class” refers to the number of events that the model can generate: 88 key presses (MIDI notes), a release, or a rest.

This sounded promising. I took a look at their demo project, and found demo code using this model:

const mvae = new mm.MusicVAE(MEL_CHORDS_CKPT);

await mvae.initialize();

// ...

const sample = await mvae.sample(4, null, { chordProgression: ["C"] });

writeTimer("mel-chords-sample-time", start);

writeNoteSeqs("mel-chords-samples", sample);

In this example, sample() is running inference and generating four different melodic sequences to accompany the provided chord progression (just a C chord). I liked the look of this, and decided to try it out in Trackstarter.

I ported the mel_chords demo code over to a utility function in my project that looked like this:

// Init the global Music VAE object

const mvae = new mm.MusicVAE(CHECKPOINT_URL);

mvae.initialize();

export async function generateMelodyForChordProgression(

chordProgression: string[],

): Promise<SequencerEvent[]> {

const NUM_SAMPLES = 1;

const sequences = await mvae.sample(NUM_SAMPLES, null, {

chordProgression,

});

return toSequencerEvents(sequences[0]);

}

This code generates a single melody for the provided chord progression, then converts it to an array of SequencerEvent objects that can be played back easily by the sequencer engine, which we’ll discuss later on. Looks easy enough! But, I ran into a few hiccups where the model didn’t recognize certain chords. For example:

Error: Unrecognized chord symbol: Dm/ma7

I noticed that the problematic chords were all slash chords - chords where the lowest note is not the root note. These were coming from chord progressions containing flattened root notes like 1-b6-b7-5.

As a test, I got rid of all of the moods that contained slash chords. ✅ success. I wasn’t able to reproduce the error after smashing the generate button dozens of times.

For posterity’s sake, I wrote a custom React hook to test whether MusicVAE successfully produces melodies for all possible cases. It iterates over every permutation of chord progression, scale, and root note, creates a chord progression, passes it to mvae.sample(), and verifies that no exception is thrown. In a perfect world, this would be an integration or unit test that runs in CI, but getting Magenta.js working in test harness proved to be a headache.

Now Magenta was producing melodies successfully, but sadly, the variety of moods produced by Trackstarter had diminished significantly…

After a little brainstorming, I realized I could simply omit everything after the slash in the slash chords:

// ["Dm/ma7"] now becomes ["Dm"]

chordProgression = chordProgression.map((chord) => chord.split("/")[0]);

With this hack in place, the test hook succeeded for all permutations! But, MusicVAE was producing melodies were often not in key with the chord progression that was played back (since we are lying about which chords are playing). Hack #2 to the rescue: since we know which scale the chord progression is in, we can simply snap the notes returned from MusicVAE to the scale:

function snapNoteToScale(midiNote: number, scale: string[]): string {

const note = Midi.midiToNoteName(midiNote);

// Map/reduce over the scale to find the closest note

return scale

.map((scaleNote) => ({

note: scaleNote,

distance: Math.abs(Interval.semitones(Note.distance(scaleNote, note))),

}))

.reduce((prev, current) =>

current.distance < prev.distance ? current : prev,

).note;

}

One downside to this approach is that it could override MusicVAE’s creative freedom to intentionally play notes out of key to introduce tension, etc. In my opinion, snapping melody notes into key generally yields more pleasant sounding results so I’m okay with the tradeoff.

Now, the melody generator was working pretty well, but it was frequenty making melodies that vamped on a single note. This was a bit boring. To try to make things more interesting, I make two tweaks:

- Requesting multiple melody options from

MusicVAE, and picking the one that had the largest variety of notes - Passing a slightly randomized

temperatureparameter tosample(). This controls how the activation function used by the neural net behaves. Higher temperatures result in more random outputs from the model.

const NUM_SAMPLES = 5;

const temperature = 0.6 + Math.random() * 0.25;

const sequences = await mvae.sample(NUM_SAMPLES, temperature, {

chordProgression,

});

// Choose the melody with the largest variety of notes

const sequence = sequences.sort((a, b) => {

// Constructing a Set from an array will capture only the unique values

const aUniqueNotes = new Set(a.notes!.map((n) => n.pitch!)).size;

const bUniqueNotes = new Set(b.notes!.map((n) => n.pitch!)).size;

// Descending sort

return bUniqueNotes - aUniqueNotes;

})[0];

This worked surprisingly well. With this change in place, the melodies generated by Trackstarter were sounding much more interesting.

Closing Thoughts

Some takeaways from building Trackstarter:

ChatGPT + rapid prototyping = ⚡🚀

ChatGPT is a game changer for quickly vetting ideas and rapid prototyping. I’m admittedly somewhat of a “shakes fist at cloud I don’t need AI to write my code for me” developer. But for Trackstarter, it’s ability to generate long lists of chord progressions and scales for different moods was invaluable and a massive time saver.

Building music apps in JavaScript = ❤️

The combination of JavaScript, React, and open source npm packages is hard to beat for building compelling music/audio apps and prototypes quickly. For Trackstarter, I was able to go from pnpm add @magenta/music to hearing AI-generated melodies in roughly 30 minutes. Try doing that in C++ and let me know how it goes!

I’ve been developing audio software largely in C++ for over a decade. C++ has a special place in my heart, but it is almost always not the right choice for building quickly. This is especially true when building an app from scratch versus adding on functionality to an existing native app.

A few small tweaks can have a large impact

For Trackstarter, I found that by having a (very crude) evaluation metric for “good melody”, I was able to greatly improve the quality of melodies delivered to the end user. In this case, it was as simple as asking for five different melody options, and picking whichever had the most unique notes.

And that’s a wrap! If you’ve made it this far, thanks for reading. Trackstarter has been a fun, fulfilling side project, and I’m finding myself using it more and more to spark creativity. I hope it helps other musicians find inspiration to write something new and different. If you’re curious, give it a try and let me know what you think. If you have any questions, comments, or feedback feel free to hit me up on X @stevedakomusic or LinkedIn

✌️ Steve